Are you considering TensorFlow? Let us share some insights from our journey from an in-house neural network stack to Google’s off-the-shelf TensorFlow.

The Visma e-conomic Machine Learning team develops Visma Smartscan, an essential component in several of Visma’s ERPs. Smartscan lets our customers submit scanned documents and PDFs, then analyses them and extracts the financial information they contain. This eliminates a lot of tedious data entry for our customers.

Development teams should always keep an eye out for improvement potential. Leading up to the fall of 2017, we started seriously considering a brand new stack built on Google’s TensorFlow project. First and foremost, TensorFlow has a lot of momentum, and we might as well exploit that. It’s hard for a team like ours to match the investment Google is making in TensorFlow or the tooling being built around the platform.

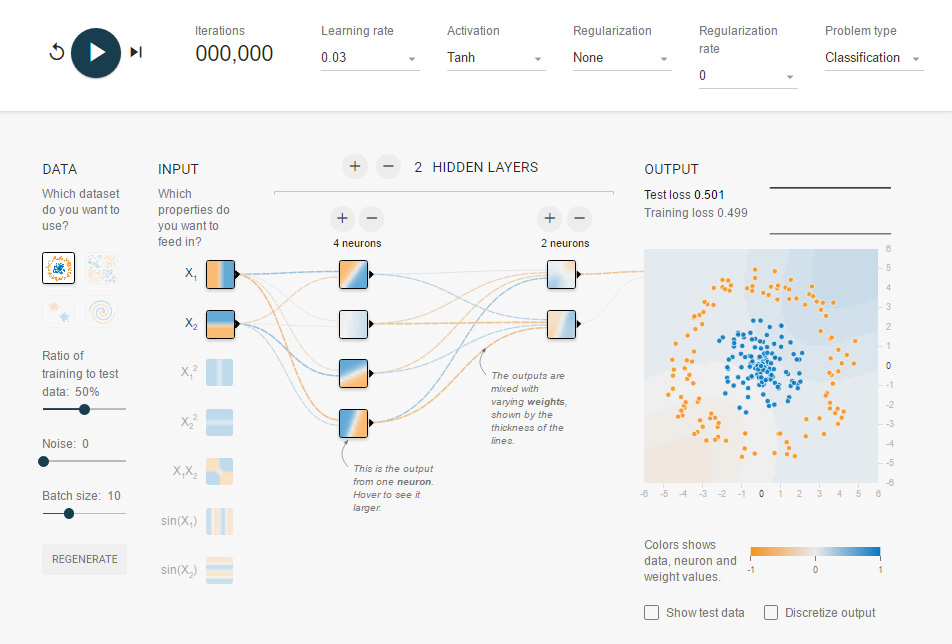

The ergonomics of building models on TensorFlow, the developer experience, exceeds what we built on our own. It’s not just fancy math in software form – there’s TensorBoard for monitoring your training runs, and a great selection of optimization methods.

Our existing platform was beginning to hit the ceiling in terms of training times. TensorFlow is built with scalable training in mind, and the TensorFlow community was already solving that problem for us. In addition, Google has already developed technology that enables us to run TensorFlow models at scale – offering the millions of predictions required every month.

Having a community around a stack is incredibly useful, and the community effect of TensorFlow is strong. When new ideas appear in the literature, TensorFlow implementations of these models quickly follow. This makes it a lot easier for us to move our product along with general trends in deep learning.

Last, but not least, TensorFlow runs on mobile! This means we’re now investigating moving machine learning to the edge – doing some of the work in apps on customer devices rather than waiting for an expensive round trip to the server.

That’s a lot to take in! TensorFlow is a big platform with a lot of opportunities. We’re nowhere near done exploiting them – but so far we’ve had a pretty great start.

The team found it really easy to get started. The first prototype models were built very quickly in Keras, validating our decision to switch. We found it convenient to build the real thing in core TensorFlow instead of Keras, more because of engineering concerns than machine learning needs. A lot of the infrastructure around TensorFlow is not designed with Keras in mind. Once we decided to adopt a research workflow based on Estimators and the Dataset API, the simplicity of the initial Keras experiments was no longer that helpful.

Since switching, we have also added TensorFlow Serving as the solution for production delivery of predictions within Smartscan. To further scale up our model development we have rolled out our own scalable compute farm (based on Kubernetes). For the time being this provides as much of TensorFlow’s scalability as we need.
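
For readers who have not used TensorFlow Serving, here is a minimal sketch of what a client-side prediction request can look like. It assumes the server's REST API is enabled on its conventional port 8501 and uses the row-oriented `instances` request format. The host, model name and feature values are hypothetical placeholders, not Smartscan's actual setup.

```python
import json
import urllib.request
from typing import Optional

# Illustrative values only; none of these come from our production setup.
SERVING_HOST = "localhost"      # hypothetical host
REST_PORT = 8501                # conventional TensorFlow Serving REST port
MODEL_NAME = "smartscan_demo"   # hypothetical model name


def predict_url(host: str, port: int, model: str,
                version: Optional[int] = None) -> str:
    """Build the URL of TensorFlow Serving's REST predict endpoint."""
    base = f"http://{host}:{port}/v1/models/{model}"
    if version is not None:
        # Pin a specific model version instead of the latest one.
        base += f"/versions/{version}"
    return base + ":predict"


def predict_body(instances: list) -> bytes:
    """Encode a batch of inputs in the row ("instances") request format."""
    return json.dumps({"instances": instances}).encode("utf-8")


def predict(instances: list, timeout: float = 5.0) -> list:
    """POST a batch to the server and return its "predictions" list.

    Requires a running TensorFlow Serving instance with the model loaded.
    """
    request = urllib.request.Request(
        predict_url(SERVING_HOST, REST_PORT, MODEL_NAME),
        data=predict_body(instances),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["predictions"]
```

Calling `predict([[0.1, 0.2, 0.3]])` would send a single made-up feature vector. The shape of each instance has to match whatever input signature the exported model declares.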

The TensorFlow project has met all our needs so far! More importantly, however, we are definitely feeling strong effects from the TensorFlow project’s momentum, and we love the leverage this provides for the team and our customers.