7/10/2021

This blog post was first published in 2018.

Where we previously released a new version of our cloud services every six weeks on average, we now have many teams who continuously deliver new functionality to our customers, several times a week, and even several times a day.

We have about 40 cross-functional, autonomous development teams (we call them Service Delivery Teams) that have full responsibility for both development and operations. With about 9 people on average, each Service Delivery Team is fully responsible for design, development (including testing), delivery (deployment), monitoring, infrastructure (this term includes IaaS, PaaS and any other resources the service depends on), handling incidents, and everything in between.

In our experience, having all the relevant experts working together in one team that is responsible for “everything” is not just more efficient (because there are fewer dependencies on other parts of the organisation); it also results in higher-quality cloud services (primarily because multiple types of competence can be utilised throughout the development process).

This transformation did not come easily or quickly. It required us to organise ourselves differently and radically change how we think and how we work.

New technology provided by public cloud platforms like Amazon Web Services and Microsoft Azure has been essential in enabling us to work the way we want to work. So how did this get started, and how did we get here?

Origins

In the spring of 2015, a small group of people from different parts of Visma gathered in a workshop in Oslo, Norway. Consciously disregarding the current state of affairs (meaning how we were organised and used to work at the time), we tried to describe what we thought would be the ideal way of building and delivering successful cloud services. Starting from a clean slate, how do we wish we could be organised, and how do we wish we could work?

We did not phrase it like this at the time, but at the end of the initial workshops, we had described a way of working that continues to live on today as our core principles: DevOps, Continuous Delivery and Public Cloud.

DevOps

We wanted to break down any semblance of silos between development and operations, so we developed the concept of a “Service Delivery Team”, which is similar to a development team but includes the operational competence necessary for the team to take full responsibility for both development and operations.

This way, development can take advantage of operational competence, and operations can take advantage of development competence, on a daily basis, early in the process – not as an afterthought.

We gave this type of team a new name to underline that they were different from traditional development teams. The responsibility of a Service Delivery Team is not just to “develop a product”, but to continuously deliver a good service to the customers. A great product is not very valuable if it’s unavailable, doesn’t perform well or delivers a bad user experience.

Monitoring

In order to be more agile, we focused heavily on monitoring so that we could better understand how our cloud services behave and how they are used. Using monitoring tools to gain insights that steer our development process is critical to making sure we spend time on features that are valuable to customers.

Note that a Service Delivery Team is responsible for designing and implementing monitoring at every level (for example infrastructure, application and user experience), as well as for reacting to alerts and extracting valuable insights.

Automation

Being capable of making small changes to our cloud services, learning from the results of those changes and repeating this cycle frequently became a high priority – but this had many other implications. It meant that we needed to focus a lot more on automated testing, as there would be a lot less time to do manual testing if we were going to release a new version on a daily basis.

It meant that the release process itself had to be fully automated. Even automated infrastructure (infrastructure as code) became a requirement for us.

This meant that infrastructure changes could be automatically applied and verified (similar to application changes) and code reviewed (to catch “bugs” and share knowledge). It also made our disaster recovery procedures more efficient and less error-prone.
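To illustrate the idea behind infrastructure as code, here is a minimal sketch in Python. It assumes infrastructure is declared as plain data and compared with the current state to produce a reviewable plan. The `Resource` shape, the `plan` and `apply` functions and the example resources are all hypothetical; they do not describe the tooling our teams actually use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """One declared piece of infrastructure (hypothetical shape)."""
    name: str
    kind: str
    size: str


def plan(desired: dict[str, Resource], actual: dict[str, Resource]) -> list[tuple[str, str]]:
    """Return the (action, name) steps that turn `actual` into `desired`."""
    steps = []
    for name, resource in sorted(desired.items()):
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != resource:
            steps.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            steps.append(("delete", name))
    return steps


def apply(desired: dict[str, Resource], actual: dict[str, Resource]) -> dict[str, Resource]:
    """Apply the plan to a copy of `actual` and return the resulting state."""
    new_state = dict(actual)
    for action, name in plan(desired, actual):
        if action == "delete":
            del new_state[name]
        else:  # "create" and "update" both converge on the declared resource
            new_state[name] = desired[name]
    return new_state


# The declared state lives in version control and can be code reviewed.
desired = {
    "web": Resource("web", "vm", "medium"),
    "db": Resource("db", "database", "large"),
}
# The state currently running (illustrative values).
actual = {
    "web": Resource("web", "vm", "small"),
    "cache": Resource("cache", "vm", "small"),
}

steps = plan(desired, actual)
new_state = apply(desired, actual)
# Verification: once applied, a second plan finds nothing left to change.
remaining = plan(desired, new_state)
```

Because the plan is derived from the declared state, applying it twice changes nothing the second time. That property is also what makes rebuilding an environment from the same declaration repeatable.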

Zero downtime

Instead of planning downtime in a scheduled maintenance window for releasing a new version of a cloud service, we needed to be capable of releasing new versions without impacting the end-users.

We refer to this as zero-downtime deployment. Nowadays we support it as a matter of course and regularly release new versions of our cloud services during office hours. If something should go wrong once in a blue moon, all the relevant experts who can help are at work.
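There are several ways to release without downtime, and this post does not prescribe one. As an illustration only, the sketch below shows a rolling update: instances are upgraded one at a time, each one is taken out of rotation first, and it only receives traffic again after a health check passes. The `Instance` class, `rolling_deploy` and the `is_healthy` callback are hypothetical names, and the "upgrade" is a stand-in for a real deployment step.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    version: str
    in_rotation: bool = True


def rolling_deploy(instances, new_version, is_healthy):
    """Upgrade instances one at a time.

    Returns (succeeded, min_serving), where min_serving is the lowest number
    of instances that were in rotation at any point during the rollout.
    """
    min_serving = len(instances)
    for inst in instances:
        old_version = inst.version
        inst.in_rotation = False  # drain: stop sending traffic to this instance
        min_serving = min(min_serving, sum(i.in_rotation for i in instances))
        inst.version = new_version  # stand-in for the real upgrade step
        if not is_healthy(inst):
            inst.version = old_version  # roll back this instance and stop
            inst.in_rotation = True
            return False, min_serving
        inst.in_rotation = True  # healthy: start taking traffic again
    return True, min_serving


fleet = [Instance("a", "1.0"), Instance("b", "1.0"), Instance("c", "1.0")]

# A rollout where every health check passes.
ok, min_serving = rolling_deploy(fleet, "1.1", lambda inst: True)

# A rollout where instance "b" fails its health check on the new version.
failed_ok, failed_min = rolling_deploy(fleet, "1.2", lambda inst: inst.name != "b")
versions_after_failure = [inst.version for inst in fleet]
```

In this sketch at most one instance is out of rotation at a time, so the rest of the fleet keeps serving users. A failed health check stops the rollout and leaves a mix of versions, which is why old and new versions need to be able to run side by side.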

Many of the nonfunctional requirements we think are important are easier to implement on one of the large public cloud platforms than on less mature cloud platforms. The total self-service nature of public cloud means that a team can more effectively take full responsibility for everything related to infrastructure and operations.

The consumption-based pricing and detailed cost insights make it easier than ever for teams to experiment and make cost-effective decisions when it comes to architecture and operational issues.

Mature APIs and other automation functionality make it easier to automate in many different areas, be it infrastructure provisioning and configuration (infrastructure as code), deployment, self-healing architecture, disaster recovery, or elastic scaling (meaning continuously and automatically matching provisioned resources with required resources).
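As a small illustration of elastic scaling, the sketch below computes how many instances to provision for a given load, bounded by a minimum and a maximum. The function name, the requests-per-second metric and the limits are assumptions made for the example; in practice a cloud platform's autoscaling service evaluates a rule like this continuously.

```python
import math


def desired_instances(requests_per_second, capacity_per_instance, minimum=2, maximum=20):
    """Match provisioned instances to the required capacity, within fixed bounds."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(minimum, min(maximum, needed))


normal_load = desired_instances(450, 100)   # needs 4.5 instances, rounded up
quiet_night = desired_instances(50, 100)    # the minimum keeps redundancy
traffic_peak = desired_instances(5000, 100) # the maximum caps the cost
```

The minimum protects availability when load is low, and the maximum keeps a traffic spike (or a bug) from provisioning an unbounded amount of paid resources.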

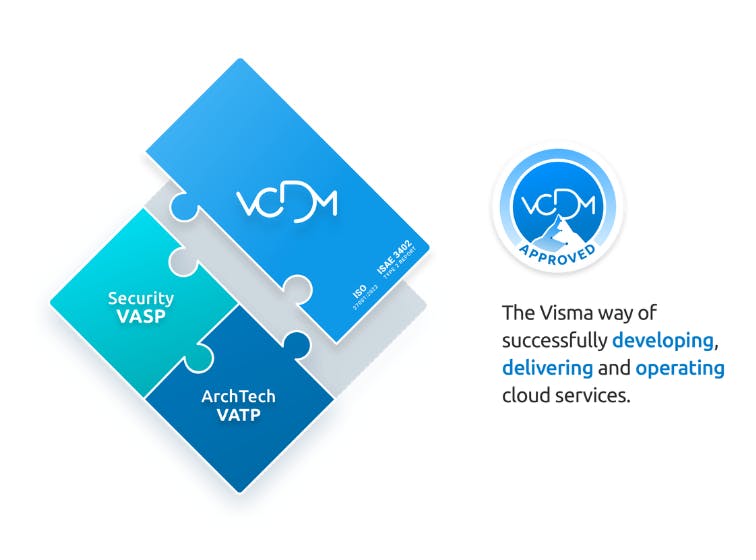

This translates into more efficient teams and more cost-efficient and reliable cloud services.

After a few initial workshops in the spring of 2015, we had an initial draft of what we called the Visma Cloud Delivery Model (VCDM), which described some core principles, how we should be organised, how we should work and some technical requirements we thought were important for successful cloud service delivery.

We also developed an onboarding process, the primary tool for actually implementing VCDM in our organisation. The purpose of the onboarding process is to start with any team, introduce them to the VCDM principles, processes and requirements, and by the end of it, have them work in full alignment with the model.

One of the first activities for an onboarding team is to ensure they are able to fill the different roles we require in all VCDM Service Delivery Teams.

This includes primary (~full time) roles like VCDM Service Owner (ultimately accountable for “everything” regarding their service), VCDM Infrastructure Engineer (primary operations specialist), VCDM Developers and VCDM Business Analysts as well as part-time roles that typically are combined with a primary role, like VCDM Agile Lead, VCDM Security Engineer and VCDM Incident Coordinator to name a few of them.

Then, the teams are introduced to different processes by dedicated VCDM Process Guides. As an example, a Service Delivery Team that is onboarding to VCDM will spend one hour with the VCDM Guide for Testing to ensure a common understanding in terms of how to think about and work with automated testing, exploratory testing, performance testing, and so on.

There are similar sessions for development, release, monitoring, security and incident handling. VCDM Guides may assist teams beyond these introductory sessions, if needed.

Another important part of the onboarding process is the self-assessments.

These are continuously updated documents that function as gap analyses for the teams. Teams that onboard to VCDM will do four self-evaluations of their own maturity.

One self-assessment is focused on Continuous Delivery practices, one is focused on service reliability and performance, one is focused on service security and one is about technical debt management.

Why technical debt management? If teams take on technical debt, it should be a conscious decision. All technical debt should be identified, documented and managed according to the associated risks.

Some requirements in these self-assessments are about the team and how they work, while others are about the cloud service and how it works. The teams analyse where they are today compared to where they should be according to VCDM requirements.

Significant deviations have to be resolved by the teams as part of the onboarding process, and this is typically what takes the most time. In our experience, the onboarding process can take anywhere from one month to a year, depending on the maturity of the team and their service.

The onboarding process ensures a minimum maturity level for all teams and services that are aligned with the model. This is how we can be confident that a VCDM onboarded service is secure, reliable, and performs well.

It is how we can be confident that a VCDM Service Delivery Team is capable of efficiently developing and delivering a high quality cloud service, and that they are capable of handling any unforeseen incidents that might happen.

Visma has a tendency to acquire other companies, and VCDM is an excellent alignment tool for us in terms of processes, requirements, mindset and vocabulary.

As an example, all VCDM teams handle incidents in the same way. They use the same template for incident reports, and they can all be found in the same place. Similarly, all VCDM services use the same framework for disaster recovery planning and testing.

Because of this alignment, we are able to gather the same type of data across all VCDM teams and cloud services. Based on this data, we have developed different maturity indexes that measure the maturity of VCDM teams and cloud services within different areas.

Currently, we have one index for security, one for Continuous Delivery and DevOps, and one for architecture and technology. The indexes are calculated on a daily basis, and everyone in Visma has access to them. Both the teams themselves and their stakeholders pay attention to them, which encourages continuous improvement.
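This post does not go into how the indexes are calculated. Purely as an illustration, the sketch below assumes an index is the weighted share of fulfilled requirements, expressed as a percentage, with the unfulfilled ones listed as gaps. The `Requirement` shape, the weights and the example requirements are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    name: str
    weight: int
    fulfilled: bool


def maturity_index(requirements):
    """Weighted percentage of fulfilled requirements (0.0 if there are none)."""
    total = sum(r.weight for r in requirements)
    if total == 0:
        return 0.0
    achieved = sum(r.weight for r in requirements if r.fulfilled)
    return round(100 * achieved / total, 1)


def gaps(requirements):
    """Names of unfulfilled requirements, heaviest first."""
    ordered = sorted(requirements, key=lambda r: -r.weight)
    return [r.name for r in ordered if not r.fulfilled]


# Illustrative data for one team; real input would come from the self-assessments.
team_requirements = [
    Requirement("automated release", 3, True),
    Requirement("infrastructure as code", 3, False),
    Requirement("disaster recovery tested", 2, True),
    Requirement("incident report template", 2, False),
]

index = maturity_index(team_requirements)
open_gaps = gaps(team_requirements)
```

Keeping the gap list next to the number shows a team what to work on next, not only how it scores.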

A couple of months after the first workshop, we had a model and an onboarding process that were ready to be piloted. Out of three initial pilot teams, two completed onboarding in four months, meaning that they were now working in alignment with the model.

One team had to back out from the pilot phase, as they were not mature enough to complete onboarding within a reasonable time frame. We saw that it was very difficult to fulfill the VCDM requirements in a traditional private cloud, with fewer self-service and automation possibilities.

In the four-month pilot period we continued to make changes to the model and the onboarding process, and we have continued to do so ever since. It’s important for us to continuously improve and keep VCDM up to date.

We are now around 12 people who work on continuously updating and improving the Visma Cloud Delivery Model as well as guiding teams through the onboarding process. We are continuously gathering feedback from the onboarded teams on how we can improve, striving to minimise any overhead and maximise the value of the model for the teams and for Visma.

To that end, we have a structured retrospective process, and role-based competence forums that ensure experience sharing and organisational learning. We also use these forums to inform about and ensure adoption of changes to the model.

It has been quite the journey since the spring of 2015.

About 40 teams have onboarded to the model so far, and there are at least 40 more in the pipeline. I’m excited to see where we will be in another three and a half years.
